When Testing Careers Feel Unstable

Staying grounded when the industry shakes

If you work in testing today, instability is real. Teams shrink, roles blur, and automation and AI are framed as replacements. Job descriptions demand everything at once, often without clarity or context.

Most testers aren’t unsettled because they lack skill. They’re unsettled because the old safety nets are gone.

This article shows how to think clearly, test effectively, and stay relevant when certainty disappears.

The fear is real, and subtle

Testers don’t fear tools. They fear losing their relevance when certainty vanishes. Specifically, they fear:

Being useful only when systems behave predictably

Needing clear instructions to feel competent

Losing credibility when scripts stop providing answers

Not knowing how to quantify “real risk”

Fear doesn’t roar. It whispers in the voice of responsibility while quietly narrowing judgment. It pushes testers toward what feels safe, not what matters.

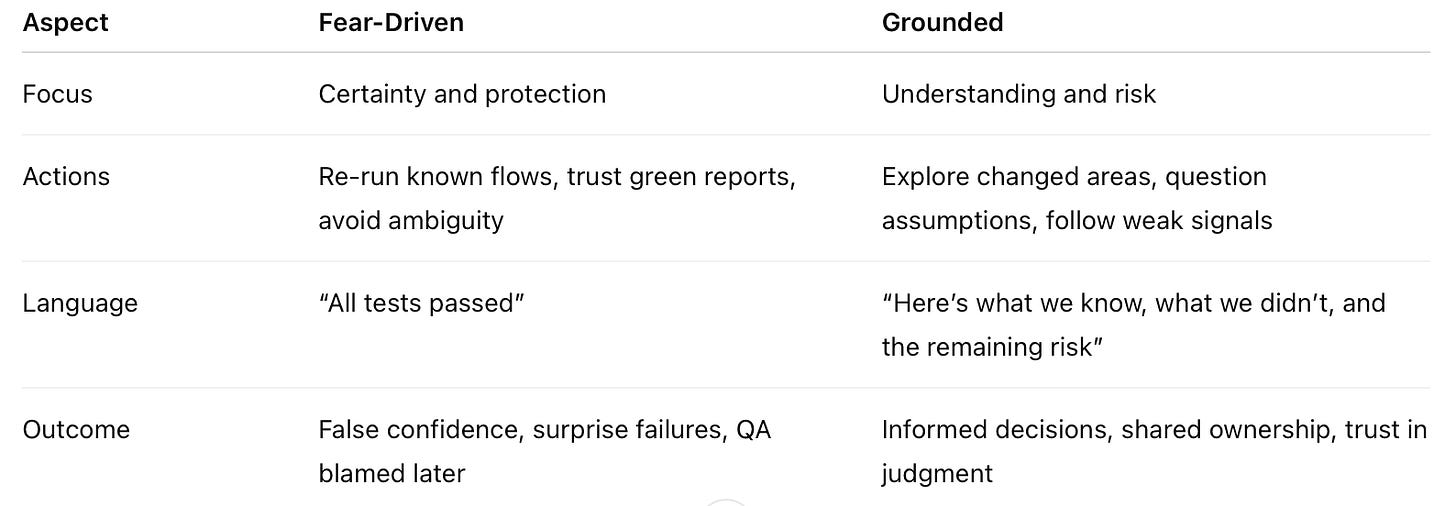

Fear-driven vs grounded testing

Testing pressure (deadlines, layoffs, AI, unclear scope) can branch in two directions: fear-driven testing or grounded testing.

Example: Under pressure, a tester may re-run familiar flows, avoid ambiguous areas, and report “high coverage” while the riskiest changes remain untested. Nothing is technically wrong, but judgment narrows. Fear favors certainty over significance.

Release decision: fear vs grounded

Scenario: Release is tomorrow. Late changes landed today. Automation is green. Product asks, “Can we ship?”

Fear-driven response:

“All test cases passed. Automation is green. No blockers found.”

This protects the tester, hides risk, and leaves stakeholders uninformed.

Grounded response:

“We validated main payment and refund flows with real data. Retry behavior added today remains untested. Past incidents suggest this is high risk. Shipping now means explicitly accepting that risk.”

This shows clear ownership, builds shared understanding, and supports an informed decision.

Notice: grounded testing communicates uncertainty, not panic.

What automation and AI can, and can’t, do

Good at:

Repeating known checks, generating variations, speeding predictable feedback.

Poor at:

Interpreting ambiguous outcomes, reasoning about business impact, noticing weak or emergent signals, negotiating tradeoffs.

Concrete example:

AI might detect that a button appears or a response is 200 OK. It cannot judge whether partial refunds, network retries, or unusual sequences could silently corrupt transactions. That requires human judgment.
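To make the gap concrete, here is a minimal sketch of a status-only check next to a business-rule check. Everything in it is hypothetical: the `Payment` record, the deliberately buggy `refund` handler, and the amounts are invented for illustration and do not describe any real payment system. The rule being checked (refunds must never exceed the captured amount) is the kind of thing a person has to decide matters before it can be automated.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Payment:
    """Hypothetical in-memory payment record, for illustration only."""
    captured: Decimal
    refunds: list[Decimal] = field(default_factory=list)


def refund(payment: Payment, amount: Decimal) -> int:
    """Toy refund handler with a deliberate bug: it never checks the remaining balance."""
    payment.refunds.append(amount)
    return 200  # the status code a shallow check would accept


def shallow_check(status: int) -> bool:
    """What a status-only check sees."""
    return status == 200


def refund_invariant_holds(payment: Payment) -> bool:
    """Business rule: total refunded must never exceed the captured amount."""
    return sum(payment.refunds, Decimal("0")) <= payment.captured


payment = Payment(captured=Decimal("100.00"))
# Two partial refunds that each look reasonable on their own.
statuses = [refund(payment, Decimal("60.00")), refund(payment, Decimal("60.00"))]

print(all(shallow_check(s) for s in statuses))  # True: every response looked fine
print(refund_invariant_holds(payment))          # False: 120.00 refunded against 100.00
```

Both refunds return 200, so a check that only looks at responses stays green while the payment ends up over-refunded.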

Enduring skills:

Exploratory testing in unclear systems

Risk analysis with incomplete information

Failure investigation without scripts

Decision support under pressure

Tip: Use AI to generate hypotheses, summarize data, and offload repetition. Don’t compete on speed. Protect your judgment.

Depth over breadth, in practice

Example exploratory session: Payment retries after network failure

Map where money could be duplicated or lost

Simulate partial failures, not just full outages

Ask what customer support would experience

Inspect logs and events, not just the UI

Document assumptions and challenge them actively

Share findings with developers. Explain why each risk matters and adjust testing based on feedback. Depth is shared understanding, not solo heroics.
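One way to probe the "partial failure" idea from the session above is a small simulation. The sketch below assumes a hypothetical `FakeGateway` stub that records a charge and then loses the response, plus a client that retries with a single idempotency key. None of these names come from a real library; they only illustrate the question "could money be duplicated?" and the habit of inspecting events rather than just the final result.

```python
import uuid


class FakeGateway:
    """Hypothetical payment gateway stub; not a real library API."""

    def __init__(self, drop_first_response: bool = True):
        self.charges = {}  # idempotency_key -> amount in cents
        self.events = []   # stand-in for the logs/events a tester would inspect
        self._drop_next = drop_first_response

    def charge(self, idempotency_key: str, amount: int) -> str:
        if idempotency_key in self.charges:
            # Same key seen before: do not charge again.
            self.events.append(("duplicate_ignored", idempotency_key))
        else:
            self.charges[idempotency_key] = amount
            self.events.append(("charged", idempotency_key))
        if self._drop_next:
            # Partial failure: the charge was recorded, but the response is lost.
            self._drop_next = False
            raise TimeoutError("response lost after charge was recorded")
        return "ok"


def pay_with_retry(gateway: FakeGateway, amount: int, attempts: int = 3) -> str:
    """Retry on timeout, reusing one idempotency key across all attempts."""
    key = str(uuid.uuid4())
    for _ in range(attempts):
        try:
            return gateway.charge(key, amount)
        except TimeoutError:
            continue
    raise RuntimeError("payment did not complete")


gateway = FakeGateway()
result = pay_with_retry(gateway, 5000)
total_charged = sum(gateway.charges.values())
event_names = [name for name, _ in gateway.events]

print(result)         # ok
print(total_charged)  # 5000: charged once despite the retry
print(event_names)    # ['charged', 'duplicate_ignored']
```

If `pay_with_retry` generated a fresh key on every attempt, the same simulation would record two charges, which is exactly the kind of silent duplication the session is meant to surface.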

Visual tip: A simple risk map listing flows, probability, impact, and coverage helps stakeholders see what is tested, what remains unknown, and why certain areas matter more.
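A risk map like this does not need special tooling. The sketch below is one possible shape, assuming a 1 to 5 scale for probability and impact and a simple product as the score. The scale, the scoring rule, and the sample numbers are illustrative assumptions, not values from a real project.

```python
from dataclasses import dataclass


@dataclass
class RiskItem:
    flow: str
    probability: int  # 1 (unlikely) to 5 (likely); the scale is an assumption
    impact: int       # 1 (minor) to 5 (severe); the scale is an assumption
    coverage: str     # "tested", "partial", or "untested"

    @property
    def exposure(self) -> int:
        """Simple probability x impact score used to rank flows."""
        return self.probability * self.impact


def render_risk_map(items: list[RiskItem]) -> str:
    """Return a plain-text table, highest exposure first."""
    ordered = sorted(items, key=lambda item: item.exposure, reverse=True)
    lines = [f"{'Flow':<30}{'Prob':>5}{'Impact':>8}{'Score':>7}  Coverage"]
    for item in ordered:
        lines.append(
            f"{item.flow:<30}{item.probability:>5}{item.impact:>8}"
            f"{item.exposure:>7}  {item.coverage}"
        )
    return "\n".join(lines)


# Sample values are placeholders chosen for illustration.
risk_map = [
    RiskItem("Main payment flow", 2, 5, "tested"),
    RiskItem("Refund flow", 2, 4, "tested"),
    RiskItem("Retry after network failure", 4, 5, "untested"),
]

print(render_risk_map(risk_map))
```

Sorting by score puts the untested retry flow at the top, so the first row a stakeholder reads is the one that most needs a decision.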

Communicating risk without noise

Example Slack or standup update:

“Core payment flow looks stable. Retry edge cases added yesterday remain untested. Historically, these caused silent failures. Recommendation: delay or explicitly accept this risk.”

Avoid blame or panic. Show informed judgment. Highlight uncertainty. Propose decisions based on risk, not convenience.

Common pitfall:

Saying “Everything passed in automation” hides risk. When production fails silently, trust is lost.

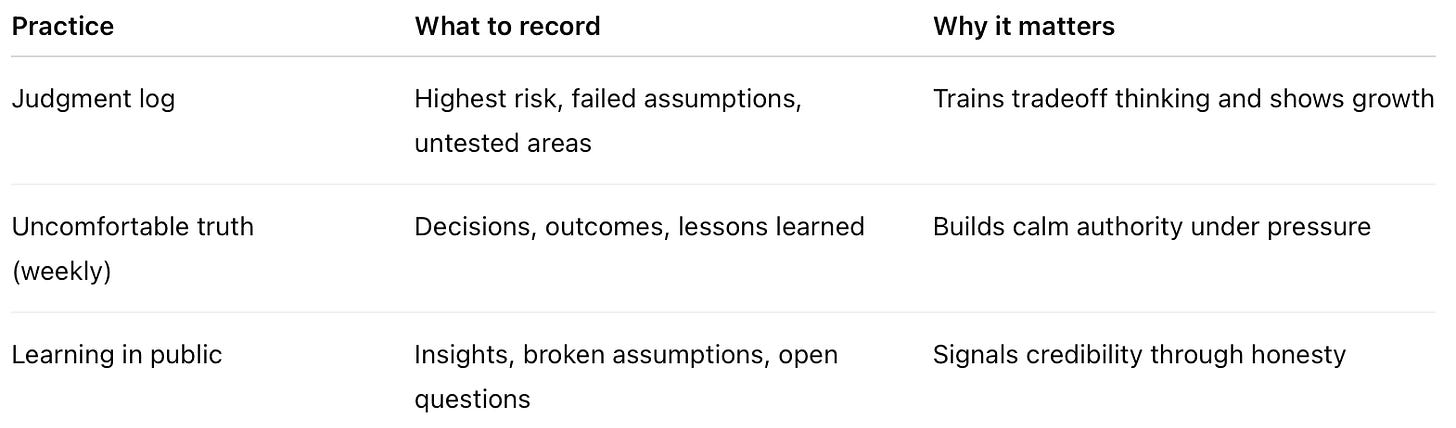

Micro-practices to build durable skill

Even small habits compound. Over time, your judgment becomes visible, resilient, and trusted.

From daily practice to career resilience

Testers who focus on judgment, risk communication, and exploration:

Interview better because they can explain decisions

Adapt faster when roles change

Stay relevant when tools rotate

Become trusted voices, not just executors

Ask yourself:

Can I uncover risks others miss?

Can I explain uncertainty clearly?

Can I support better decisions under pressure?

If yes, then layoffs, titles, and tools do not define your value.

Patience over speed

Shaky times make speed tempting, but rushed moves damage careers: poor role fit, shallow learning, burnout, broken trust.

Patience isn’t waiting. It’s refusing to panic-learn and panic-decide. Clarity stabilizes careers better than motion.

Final thought

Testing is becoming more honest, not easier. Safety nets are thinner. Expectations are higher. The work is more human.

Fear is normal, but it doesn’t run the work. Strong testers aren’t fearless. They are clear-headed when certainty disappears.

Master judgment under uncertainty, and no layoff, tool, or trend can make your skills irrelevant.

A quiet reminder as the year closes.

